Generative Models Explained: VAEs, GANs, Diffusion, Transformers, Autoregressive Models & NeRFs

Generative models have revolutionized artificial intelligence by enabling machines to create new content—be it images, text, audio, or 3D structures. This article delves into six prominent generative models: Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), Diffusion Models, Transformers, Autoregressive Models, and Neural Radiance Fields (NeRFs). We’ll explore their architectures, strengths, weaknesses, and real-world applications.


What Are Generative Models?

Generative models are a class of machine learning models that learn the underlying distribution of a dataset in order to generate new data samples that resemble the original input data. Unlike discriminative models, which predict labels or outcomes given input data, generative models aim to create new content—such as images, text, audio, or 3D structures—based on the patterns they have learned.

At their core, generative models answer this question:

“Given what I know about the data, how can I produce something new that still fits the same distribution?”

Key Characteristics:

-

Learning the data distribution: Generative models try to estimate P(x), the probability distribution of the data itself, rather than P(y | x), the probability of a label y given an input x, which is what classifiers model.

-

Sampling capabilities: Once trained, they can generate new, previously unseen samples that look like they came from the original dataset.

-

Latent space understanding: Many generative models use a latent (hidden) representation of data to generate meaningful variations of inputs.
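The simplest way to see "estimate P(x), then sample" in action is a model far smaller than any of the six below. This sketch fits a one-dimensional Gaussian to data by maximum likelihood and draws new points from it. The function names and the data are hypothetical, chosen only for illustration.

```python
import math
import random
import statistics


def fit_gaussian(data):
    """Maximum-likelihood fit of a 1-D Gaussian: sample mean and population std."""
    return statistics.fmean(data), statistics.pstdev(data)


def log_likelihood(x, mu, sigma):
    """log P(x) under the fitted Gaussian density."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def sample(mu, sigma, n, seed=0):
    """Generate n new points from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


data = [4.8, 5.1, 5.3, 4.9, 5.0, 5.2, 4.7]
mu, sigma = fit_gaussian(data)
new_points = sample(mu, sigma, n=5)
print(f"mu={mu:.3f}, sigma={sigma:.3f}")
print("new samples:", [round(p, 2) for p in new_points])
# A point that looks like the data scores far higher than an outlier.
print(log_likelihood(5.0, mu, sigma), log_likelihood(9.0, mu, sigma))
```

Deep generative models replace the two Gaussian parameters with millions or billions of network weights, but the workflow of fitting a distribution and then sampling from it is the same.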

Why Are Generative Models Important?

Generative models have become foundational to the development of AI applications such as:

-

Image and video synthesis

-

Text and code generation

-

Drug discovery and molecular design

-

3D scene reconstruction

Popular examples of generative models include VAEs, GANs, Diffusion Models, Transformers, Autoregressive Models, and Neural Radiance Fields (NeRFs)—each offering unique advantages and trade-offs depending on the use case.

6 Common Types of Generative Models

1. Variational Autoencoders (VAEs)

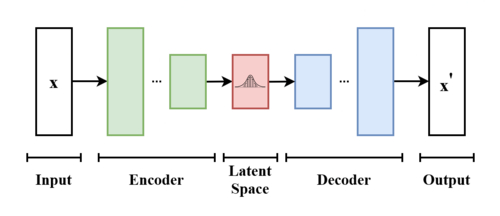

Variational Autoencoders (VAEs) are a type of generative model that learns to compress data into a latent (hidden) representation and then reconstruct it, enabling the generation of new, similar data samples. Introduced by Kingma and Welling in 2013, VAEs are widely used in image synthesis, anomaly detection, and representation learning.

How VAEs Work

VAEs consist of two main components:

-

Encoder: This neural network maps input data (like an image or a sentence) to a latent space—a lower-dimensional space that captures the most important features. Instead of mapping to a single point, it learns a probability distribution (usually Gaussian) characterized by a mean and standard deviation.

-

Decoder: This network takes a sample from the latent distribution and reconstructs it back into the original data format.

The training objective is to minimize two losses:

-

Reconstruction loss: Ensures the decoder can accurately reconstruct the input.

-

KL-divergence loss: Encourages the latent distributions to be close to a standard normal distribution, making sampling possible.
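The two loss terms can be sketched for a single example. This is a minimal pure-Python illustration under stated assumptions: the encoder outputs a mean and a log-variance per latent dimension (a common parameterization), reconstruction error is squared error, and `toy_decoder` is a hypothetical stand-in for a trained network. It also shows the reparameterization trick, z = mu + sigma * eps, which in a real framework lets gradients flow through the sampling step.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def reconstruction_loss(x, x_hat):
    """Squared error (a fixed-variance Gaussian decoder, up to scale and constants)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def vae_loss(x, mu, log_var, decoder, rng):
    """Reconstruction loss plus KL term for one example."""
    z = reparameterize(mu, log_var, rng)
    return reconstruction_loss(x, decoder(z)) + kl_to_standard_normal(mu, log_var)


def toy_decoder(z):
    """Hypothetical decoder: maps a 2-D latent to a 3-D reconstruction."""
    return [z[0], z[1], z[0] + z[1]]


rng = random.Random(0)
x = [0.5, -0.2, 0.3]
mu, log_var = [0.4, -0.1], [-1.0, -1.0]
print("KL term:", kl_to_standard_normal(mu, log_var))
print("total loss:", vae_loss(x, mu, log_var, toy_decoder, rng))
```

The KL term is zero only when every latent dimension already matches N(0, 1), which is why it pulls the latent space toward a distribution that is easy to sample from.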

Key Features of VAEs

-

Probabilistic nature: VAEs model data uncertainty using distributions, which allows for more diverse outputs.

-

Smooth latent space: Small changes in latent variables yield smooth changes in the output, useful in creative tasks like style interpolation.

-

Unsupervised learning: No need for labeled data to learn a structured representation.
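Latent interpolation is usually a walk between two latent codes, decoding each intermediate point to get a gradual morph between two outputs. A minimal sketch using linear interpolation, assuming `z_a` and `z_b` are latent vectors produced by a trained encoder (spherical interpolation is a common alternative for Gaussian latents).

```python
def lerp_latents(z_a, z_b, steps):
    """Return `steps` evenly spaced latent vectors from z_a to z_b, inclusive."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)
        path.append([(1 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return path


z_a = [0.0, 1.0]
z_b = [1.0, -1.0]
for z in lerp_latents(z_a, z_b, steps=5):
    print(z)  # in practice, each z would be passed to the decoder
```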

Strengths

-

Stable and straightforward training process.

-

Provides a structured latent space suitable for interpolation and data exploration.

-

Effective for anomaly detection and representation learning.

Weaknesses

-

Often produces blurrier outputs compared to GANs.

-

May struggle with capturing fine-grained details in complex data.

Applications of VAEs

-

Image generation: Generate new faces, objects, or handwriting styles.

-

Anomaly detection: Identify outliers by measuring reconstruction errors.

-

Representation learning: Learn meaningful compressed features of data.

-

Data imputation: Fill in missing values in data with plausible guesses.
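The anomaly-detection use case comes down to a threshold on reconstruction error: a VAE trained on normal data reconstructs normal inputs well and unusual ones poorly. A minimal sketch, assuming per-sample reconstruction errors have already been computed; the mean-plus-k-standard-deviations rule and k = 3 are illustrative choices, not a standard, and the error values are made up.

```python
import statistics


def fit_threshold(normal_errors, k=3.0):
    """Threshold = mean + k * std of reconstruction errors on normal data."""
    return statistics.fmean(normal_errors) + k * statistics.pstdev(normal_errors)


def flag_anomalies(errors, threshold):
    """Indices of samples whose reconstruction error exceeds the threshold."""
    return [i for i, e in enumerate(errors) if e > threshold]


# Hypothetical per-sample reconstruction errors.
normal_errors = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.08]
threshold = fit_threshold(normal_errors)
new_errors = [0.11, 0.95, 0.10, 0.40]
print("threshold:", round(threshold, 3))
print("anomalous indices:", flag_anomalies(new_errors, threshold))
```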

2. Generative Adversarial Networks (GANs)

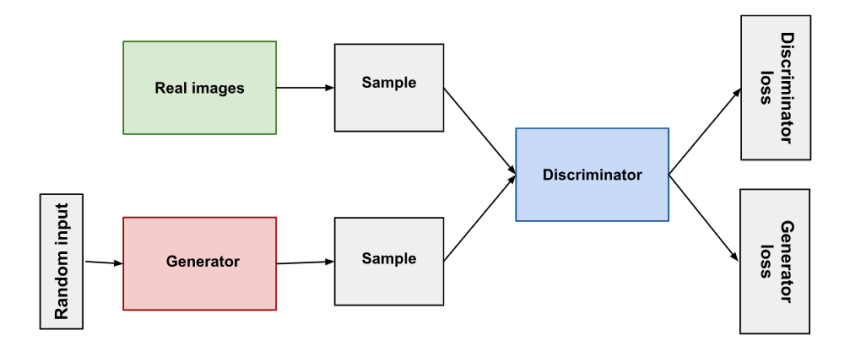

Generative Adversarial Networks (GANs) are a class of generative models introduced by Ian Goodfellow and his collaborators in 2014. They are designed to generate realistic data samples—such as images, text, or audio—by training two neural networks in a competitive setting. GANs have revolutionized content creation and are widely known for producing high-fidelity synthetic data.

How GANs Work

GANs are made up of two main components that play a game against each other:

-

Generator: This network takes random noise as input and tries to produce data that resemble the real data distribution. Its goal is to “fool” the discriminator by generating realistic outputs.

-

Discriminator: This network evaluates whether the data it receives is real (from the dataset) or fake (produced by the generator). It acts as a binary classifier.

The two networks are trained simultaneously:

-

The generator improves by learning how to create more convincing fakes.

-

The discriminator improves by learning to better distinguish between real and fake data.

This adversarial process continues until the generator produces outputs that the discriminator cannot reliably tell apart from real data.
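The two competing objectives can be written as binary cross-entropy losses on the discriminator's output probabilities. In this sketch, `d_real` and `d_fake` are assumed to be the discriminator's probabilities that real and generated samples are real. The generator uses the "non-saturating" loss, -log D(G(z)), which the original GAN paper recommends in practice because minimizing log(1 - D(G(z))) gives weak gradients early in training.

```python
import math

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real, d_fake):
    """BCE: push D(x) toward 1 for real samples and D(G(z)) toward 0 for fakes."""
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: push D(G(z)) toward 1."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# A discriminator that easily spots fakes (typical early in training)...
print(discriminator_loss([0.9, 0.95], [0.05, 0.1]), generator_loss([0.05, 0.1]))
# ...versus the idealized equilibrium, where D outputs 0.5 for everything.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]), generator_loss([0.5, 0.5]))
```

At the idealized equilibrium the discriminator loss settles at 2 log 2, the value it gets by guessing, which is the formal version of "cannot reliably tell apart".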

Key Features of GANs

-

Adversarial training: This setup leads to very sharp and realistic outputs.

-

No explicit likelihood: Unlike VAEs, GANs don’t require a likelihood function, making them more flexible in some domains.

-

Difficult training dynamics: The training process can be unstable and sensitive to hyperparameters.

Strengths

-

Capable of generating high-resolution, realistic images.

-

Effective in data augmentation and style transfer tasks.

-

Flexible architecture adaptable to various data types.

Weaknesses

-

Training can be unstable and sensitive to hyperparameters.

-

Prone to mode collapse, where the generator produces limited varieties of outputs.

Applications of GANs

-

Image synthesis: Create hyper-realistic images of people, places, or objects (e.g., ThisPersonDoesNotExist.com).

-

Style transfer: Transform the style of an image (e.g., turning photos into paintings).

-

Super-resolution: Increase image resolution while maintaining quality.

-

Data augmentation: Generate more training data to improve model performance.

-

Text-to-image generation: Convert descriptive text into coherent images (e.g., StackGAN, AttnGAN, GigaGAN).

Variants of GANs

Over the years, many variants have been developed to address GANs’ limitations and expand their capabilities:

-

DCGAN (Deep Convolutional GAN) – Improved performance on image generation using CNNs.

-

CycleGAN – Enables image-to-image translation without paired training data.

-

StyleGAN – Known for generating photorealistic images with controllable style features.

-

Wasserstein GAN (WGAN) – Improves training stability by replacing the original loss with one based on the Wasserstein (earth mover's) distance.
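For comparison with the standard GAN losses, a WGAN replaces the probability-outputting discriminator with a "critic" that outputs unbounded scores. A minimal sketch of the original WGAN losses, assuming `c_real` and `c_fake` are critic scores. The original paper keeps the critic approximately Lipschitz by clipping its weights (0.01 is the value used there); later work such as WGAN-GP uses a gradient penalty instead.

```python
def critic_loss(c_real, c_fake):
    """Critic minimizes mean(C(fake)) - mean(C(real)), i.e. widens the score gap."""
    return sum(c_fake) / len(c_fake) - sum(c_real) / len(c_real)


def wgan_generator_loss(c_fake):
    """Generator minimizes -mean(C(fake)), i.e. raises the critic's score on fakes."""
    return -sum(c_fake) / len(c_fake)


def clip_weights(weights, c=0.01):
    """Weight clipping from the original WGAN: a crude way to keep the critic Lipschitz."""
    return [max(-c, min(c, w)) for w in weights]


print(critic_loss([1.2, 0.8], [-0.5, -0.3]))  # negative: critic separates real from fake
print(wgan_generator_loss([-0.5, -0.3]))
print(clip_weights([0.5, -0.003, -0.2]))
```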

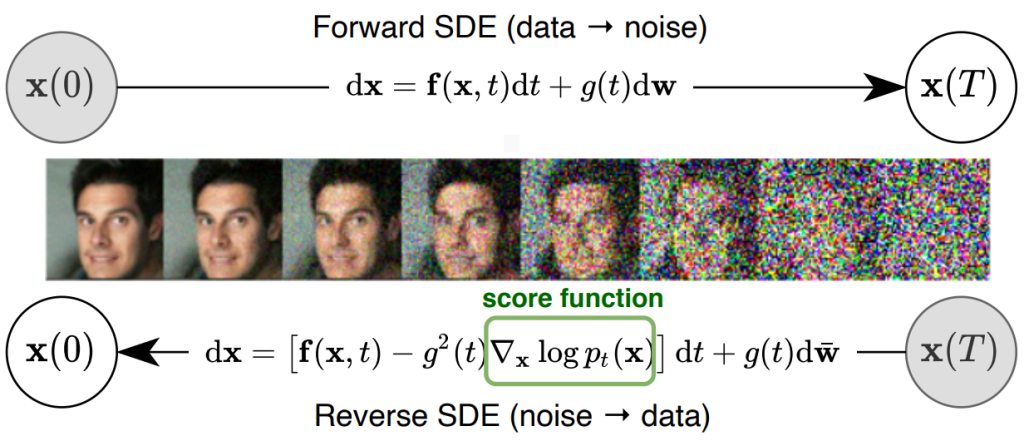

3. Diffusion Models

Diffusion models are a class of generative models that learn to create data by reversing a gradual noising process: during training, noise is added to real data step by step, and a neural network learns to undo it. Inspired by non-equilibrium thermodynamics, these models have gained massive popularity for their ability to generate high-quality, diverse samples, particularly in image synthesis.

How Diffusion Models Work

Diffusion models operate in two main phases:

-

Forward process (diffusion): Starting with real data, noise is progressively added over many steps until the data becomes nearly pure Gaussian noise.

-

Reverse process (denoising): A neural network is trained to reverse this diffusion process, step-by-step, transforming noise back into coherent data.

This reverse process is learned so that, during generation, the model can start with random noise and iteratively denoise it to produce realistic samples.
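In DDPM-style models, the forward process has a convenient closed form. Given a noise schedule beta_t and alpha_bar_t = prod_{s<=t} (1 - beta_s), a noisy sample at any step t can be drawn directly as x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, with eps ~ N(0, I). During training, the network is typically asked to predict eps from x_t and t. A minimal sketch, assuming the linear schedule from the DDPM paper (beta from 1e-4 to 0.02 over T = 1000 steps) and 0-based step indices.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances for steps 0..T-1."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]


def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0, t, abar, rng):
    """Draw x_t ~ q(x_t | x_0) in one shot; also return the noise (the training target)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


betas = linear_beta_schedule()
abar = alpha_bars(betas)
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]  # a toy "image" with three pixels
for t in (0, 250, 999):
    xt, _ = q_sample(x0, t, abar, rng)
    print(f"t={t:4d}  signal weight={math.sqrt(abar[t]):.4f}  x_t={[round(v, 3) for v in xt]}")
```

By the last step the signal weight is close to zero, so x_t is nearly pure Gaussian noise; generation runs the learned reverse process starting from exactly that kind of noise.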

Key Features

-

Stability: Diffusion models are generally more stable to train compared to GANs.

-

High-quality output: Recent models like Stable Diffusion and DALL·E 3 produce photorealistic images with detailed control.

-

Slow sampling: The generation process is computationally expensive, though newer approaches like DDIM and Latent Diffusion reduce this burden.

Strengths

-

Produces diverse and high-fidelity outputs.

-

More stable training compared to GANs.

-

Effective in capturing complex data distributions.

Weaknesses

-

Slower inference time due to iterative sampling.

-

Computationally intensive, requiring significant resources.

Applications

-

Image generation (e.g., Stable Diffusion, Imagen)

-

Text-to-image synthesis

-

Audio generation (e.g., DiffWave)

-

Molecular design in drug discovery

Notable Diffusion Models

-

DDPM (Denoising Diffusion Probabilistic Model)

-

Stable Diffusion

-

Imagen by Google

-

DALL·E 3 (uses a modified diffusion approach)

Diffusion models now rival GANs in quality and have surpassed them in stability and fine control, especially in conditional generation tasks.

4. Transformers

Transformers are a deep learning architecture introduced by Vaswani et al. in 2017 in their groundbreaking paper, “Attention Is All You Need.” Originally designed for sequence modeling tasks in NLP such as machine translation, transformers now underpin most state-of-the-art large language models (LLMs) and many multimodal generative models.

How Transformers Work

Transformers rely on a mechanism called self-attention, which allows each token in a sequence to dynamically focus on other tokens. The architecture consists of layers of:

-

Multi-head self-attention

-

Feedforward networks

-

Layer normalization and residual connections

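This design allows transformers to model long-range dependencies directly and, during training, to process all positions of a sequence in parallel, unlike traditional RNNs or LSTMs, which must process tokens one at a time. The trade-off is that the cost of standard self-attention grows quadratically with sequence length.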
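The core operation is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. The sketch below computes it for a single head in pure Python, assuming the queries, keys, and values have already been produced by learned linear projections (omitted here). The optional causal mask, which stops each token from attending to later tokens, is what decoder-only generative models such as GPT use.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(Q, K, V, causal=False):
    """Single-head scaled dot-product attention over lists of row vectors."""
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        scores = []
        for j, k in enumerate(K):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future positions
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)  # how much token i attends to each token j
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out


# Three tokens with 2-dimensional queries, keys, and values (toy numbers).
Q = K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(self_attention(Q, K, V))
print(self_attention(Q, K, V, causal=True))  # row 0 can only see token 0
```

Each output row is a weighted average of the value vectors, so every token can draw on any other token in one step regardless of distance; a real layer runs several such heads in parallel and adds the feedforward, normalization, and residual pieces listed above.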

Generative Use of Transformers

Transformers can be trained autoregressively (like GPT models) or bidirectionally (like BERT for understanding tasks). In generative settings, they predict the next token in a sequence, making them ideal for:

-

Text generation (e.g., ChatGPT, Claude, Gemini)

-

Code generation (e.g., Codex, Code Llama)

-

Image generation (e.g., Parti, an autoregressive text-to-image transformer)

-

Audio and music synthesis
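Generating with a trained transformer means repeatedly turning the model's scores (logits) for the next token into a choice. A minimal sketch of two common decoding controls, temperature scaling and top-k filtering, assuming `logits` is a list of scores over a tiny hypothetical vocabulary; real models apply the same idea to vocabularies of tens of thousands of tokens.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Sample a token index after temperature scaling and optional top-k filtering."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: pick the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    candidates = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]  # keep only the k most likely tokens
    m = max(scaled[i] for i in candidates)
    weights = [math.exp(scaled[i] - m) for i in candidates]  # unnormalized softmax
    return rng.choices(candidates, weights=weights, k=1)[0]


vocab = ["the", "cat", "sat", "on", "mat"]
logits = [2.0, 1.0, 0.5, 0.1, -1.0]
rng = random.Random(42)
print("greedy:", vocab[sample_next_token(logits, temperature=0)])
print("sampled:", [vocab[sample_next_token(logits, 0.8, top_k=3, rng=rng)] for _ in range(5)])
```

Lower temperatures sharpen the distribution toward the most likely token; higher ones flatten it and produce more varied output.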

Key Features

-

Scalable: Handles massive training datasets and models with billions of parameters.

-

Versatile: Used across text, image, audio, and multimodal domains.

-

Fine-tunable: Can be adapted to specialized tasks with minimal data.

Strengths

-

Handles long-range dependencies in data effectively.

-

Highly parallelizable, leading to faster training times.

-

Versatile across different data modalities.

Weaknesses

-

Requires large datasets and computational resources.

-

May struggle with tasks requiring fine-grained spatial details without architectural modifications.

Applications

-

Natural language generation

-

Translation

-

Summarization

-

Question answering

-

Multimodal generation (text-to-image, video, audio, code)

Examples of Generative Transformer Models

-

GPT-4 / GPT-4 Turbo

-

Claude 3

-

Gemini 1.5

-

LLaMA 3

-

PaLM 2

-

ERNIE Bot

-

Orca

-

Mistral

-

Tülu 3

-

Phi-3

-

Vicuna

Transformers have become the gold standard in generative AI. Their modular, scalable architecture is the backbone of both open-source and commercial generative systems today.


5. Autoregressive Models

Autoregressive (AR) models are a foundational class of generative models that predict the next element in a sequence based on previously observed elements. These models break down complex data generation tasks into a sequence of simpler predictions.

How Autoregressive Models Work

The core principle behind AR models is the chain rule of probability, which decomposes a joint probability distribution over a sequence into a product of conditional probabilities:

P(x) = P(x₁) * P(x₂ | x₁) * P(x₃ | x₁, x₂) * … * P(xₙ | x₁, …, xₙ₋₁)

This makes AR models especially suited to tasks like:

-

Language modeling

-

Speech synthesis

-

Time-series forecasting

-

Image generation (pixel-by-pixel)

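Because each step depends on the outputs of all previous steps, generation is inherently sequential, which limits parallelism during inference. (During training, architectures such as causal transformers and PixelCNN can still score every position of a known sequence in parallel.)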
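The chain-rule factorization can be made concrete with the simplest autoregressive model, a bigram model in which each token depends only on the one before it. That is a strong simplifying assumption; GPT-style models condition on the entire prefix. The sketch computes log P(x) as a sum of log conditionals and generates a sequence one token at a time, which is why sampling cannot be parallelized across positions. The probability table is made up for illustration.

```python
import math
import random

# Hypothetical conditional probabilities P(next | previous); "<s>" and "</s>" mark start and end.
BIGRAM = {
    "<s>": {"the": 0.7, "a": 0.3},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.4, "dog": 0.6},
    "cat": {"sat": 0.8, "</s>": 0.2},
    "dog": {"sat": 0.5, "</s>": 0.5},
    "sat": {"</s>": 1.0},
}


def log_prob(tokens):
    """Chain rule: log P(x) = sum_i log P(x_i | x_{i-1}) under the bigram assumption."""
    total, prev = 0.0, "<s>"
    for tok in tokens + ["</s>"]:
        total += math.log(BIGRAM[prev][tok])
        prev = tok
    return total


def generate(rng, max_len=10):
    """Sample one token at a time, each conditioned on the previous output."""
    out, prev = [], "<s>"
    for _ in range(max_len):
        options = list(BIGRAM[prev])
        nxt = rng.choices(options, weights=[BIGRAM[prev][o] for o in options], k=1)[0]
        if nxt == "</s>":
            break
        out.append(nxt)
        prev = nxt
    return out


print(math.exp(log_prob(["the", "cat", "sat"])))  # 0.7 * 0.5 * 0.8 * 1.0
print(generate(random.Random(1)))
```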

Notable Autoregressive Models

-

GPT Series: The Generative Pre-trained Transformers (GPT-2, GPT-3, GPT-4) are quintessential autoregressive language models trained to predict the next token.

-

PixelRNN / PixelCNN: Early autoregressive models for image generation.

-

WaveNet: An autoregressive model for raw-audio speech generation developed by DeepMind.

-

XLNet: Generalizes autoregressive pretraining through permutation language modeling, predicting tokens under many different factorization orders.

-

Transformer-XL: Extends transformers with segment-level recurrence and relative positional encodings, letting the model use context beyond a fixed-length window.

Strengths

-

Excellent at sequence generation with high fidelity.

-

Provides exact likelihoods through the chain rule and is simple to sample from.

-

Strong performance on next-token prediction tasks.

Limitations

-

Slow inference: Tokens must be generated one at a time.

-

Context bottleneck: Conditioning on very long histories is expensive, and most models can only attend to a fixed-length context window.

-

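Limited parallelism: Unlike a VAE decoder, which produces a full sample in one forward pass, or a diffusion model, which updates every position at each denoising step, an AR model cannot generate later positions before earlier ones.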

Applications

-

Text generation and completion.

-

Speech synthesis and audio generation.

-

Time-series forecasting.

6. Neural Radiance Fields (NeRFs)

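NeRFs, short for Neural Radiance Fields, are a powerful technique, commonly grouped with generative models, for synthesizing novel views of complex 3D scenes from a set of 2D images. Introduced by researchers at UC Berkeley, Google, and UC San Diego in 2020, NeRFs have rapidly transformed 3D computer vision and graphics. Strictly speaking, a standard NeRF is optimized for a single scene rather than learning a distribution over many scenes; it earns its place here because it synthesizes new images of that scene.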

How NeRFs Work

NeRFs represent a 3D scene using a neural network that takes as input a 3D coordinate and a viewing direction and outputs:

-

Volume density (i.e., how much light is blocked)

-

Radiance (RGB color)

By querying this network at many points along rays cast from a virtual camera and combining the results with volumetric rendering, NeRFs synthesize highly detailed 2D images of the scene from new viewpoints.
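The volumetric rendering step can be sketched with the discrete quadrature used in the original NeRF paper. For samples i along a ray with density sigma_i, color c_i, and spacing delta_i, the pixel color is C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i, where the transmittance T_i = exp(-sum_{j<i} sigma_j * delta_j) is the fraction of light that reaches sample i unblocked. The sketch assumes the network has already returned a density and color for each sample point; it only does the compositing, and the scene values are made up.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite per-sample densities and RGB colors along one ray into a pixel color."""
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_1 = 1: nothing blocks light before the first sample
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        transmittance *= 1.0 - alpha  # equals exp(-sum of sigma*delta so far)
    return pixel, transmittance


# Empty space, then a dense red surface, then a blue region hidden behind it.
sigmas = [0.0, 50.0, 50.0]
colors = [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
deltas = [0.1, 0.1, 0.1]
pixel, leftover = render_ray(sigmas, colors, deltas)
print([round(c, 3) for c in pixel], leftover)
```

The green sample contributes nothing because its density is zero, and the blue region barely shows because the red surface in front absorbs almost all the light, which is how NeRF handles occlusion without an explicit surface.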

Key Concepts

-

Implicit representation: NeRFs do not store point clouds or meshes explicitly, but instead encode the scene in the parameters of a neural network.

-

View synthesis: They can generate new images of the same scene from novel angles.

-

Volumetric rendering: Simulates light accumulation through a semi-transparent volume.

Strengths

-

Generates high-quality 3D representations from 2D images.

-

Captures intricate details and lighting conditions.

-

Advances in real-time rendering have improved efficiency.

Weaknesses

-

Computationally intensive, especially for high-resolution outputs.

-

Requires many images from different viewpoints, with known camera poses (often estimated with structure-from-motion tools such as COLMAP), for good results.

Applications

-

Virtual reality (VR) and augmented reality (AR)

-

Gaming and animation

-

Cultural heritage preservation

-

Autonomous driving (scene understanding)

Notable Extensions

-

Instant-NGP (NVIDIA): Improves training and rendering speed significantly.

-

Mip-NeRF: Addresses aliasing artifacts.

-

Dynamic NeRFs: Extends NeRFs to dynamic scenes.

-

NeRF-W: Handles unstructured photos from the internet.

Comparison of Generative Models

| Model Type | Key Idea | Strengths | Limitations | Common Use Cases | Training Complexity |
|---|---|---|---|---|---|
| VAE (Variational Autoencoder) | Encodes data into a latent distribution and reconstructs it with a probabilistic decoder | Structured latent space; stable training; easy interpolation | Blurry outputs; lower fidelity than GANs | Image generation, anomaly detection, data compression | Moderate |
| GAN (Generative Adversarial Network) | Trains a generator against a discriminator to produce realistic data | Sharp, realistic outputs; high sample quality | Mode collapse; unstable training; no built-in encoder for mapping data back to the latent space | Image synthesis, super-resolution, deepfake creation | High |
| Diffusion Model | Generates data by iteratively denoising random noise | State-of-the-art image quality; stable training | Slow sampling; high computational cost | Text-to-image (e.g., Stable Diffusion, DALL·E 2 and 3), audio | Very High |
| Transformer | Self-attention-based architecture for sequential and non-sequential data | Scalability; works for text, images, audio, video | Data- and compute-intensive; limited interpretability | Text generation, image captioning, code, translation | Very High |
| Autoregressive Model | Predicts each element from the previous ones | High accuracy in sequence tasks; well suited to language modeling | Slow inference; not suited to parallel generation | Language models (GPT), music, code, time series | High |
| NeRF (Neural Radiance Fields) | Uses a neural network to synthesize novel views of a 3D scene from posed 2D images | Photorealistic novel views; captures fine detail and view-dependent lighting | Original formulation assumes static scenes; needs many posed images; slow training and rendering | 3D reconstruction, AR/VR, robotics, mapping | Very High |

Conclusion

Generative models have evolved dramatically over the past few years, each model architecture serving a unique set of use cases:

-

VAEs enable smooth latent spaces and controlled generation.

-

GANs provide sharp outputs but are challenging to train.

-

Diffusion models offer stability and high quality, becoming a new favorite.

-

Transformers dominate text, code, and multimodal generation.

-

Autoregressive models remain best-in-class for sequence prediction.

-

NeRFs extend generative power to 3D reconstruction and rendering.

As these technologies continue to converge and evolve, the next generation of generative AI will likely combine multiple paradigms—blending the structured latent spaces of VAEs, the high fidelity of GANs, the flexibility of transformers, and the spatial understanding of NeRFs.