How to Detect and Prevent Deepfakes in 2025: A Complete Guide for the AI Era

The rise of generative AI has brought forth revolutionary innovations across industries, from automated content creation to personalized recommendations. However, alongside these benefits, a darker challenge has emerged—deepfakes. These synthetic videos, images, or audio recordings generated by AI can be nearly indistinguishable from real ones, making them powerful tools for misinformation, fraud, and identity theft.

As deepfake incidents rise in frequency and sophistication, preventing their spread has become a priority for businesses, governments, and individuals alike. In this article, we explore how to detect and prevent deepfakes in the era of generative AI, offering practical insights drawn from technology, policy, and behavioral strategies.

Understanding Deepfakes: What Are They?

Deepfakes are media generated or altered using deep learning models, particularly Generative Adversarial Networks (GANs) and autoencoders, to make it appear as if someone said or did something they never actually did.

Types of Deepfakes:

- Video Deepfakes: Swap faces or manipulate body movements.
- Audio Deepfakes: Clone voices to fake conversations or commands.
- Image Deepfakes: Alter faces or generate fake identities.
- Text-based Deepfakes: Mimic writing styles using language models.

These types of content pose serious security and ethical concerns, especially when they go viral or are used in sensitive contexts like politics, finance, or law enforcement.

How Generative AI Fuels Deepfakes

The rapid advancement of generative AI models like StyleGAN, DALL·E, and voice cloning systems has made the creation of deepfakes easier and more convincing than ever before.

Key Technologies Enabling Deepfakes:

- Generative Adversarial Networks (GANs): Consist of two neural networks, a generator and a discriminator, that compete to produce realistic synthetic content.
- Autoencoders: Encode real media and reconstruct it with altered features.
- Text-to-Image and Text-to-Video models: Generate synthetic media from text descriptions.
- Synthetic Voice Cloning: AI models can now replicate someone’s voice from a short recording, in some cases 30 seconds of audio or less.

What makes these technologies dangerous in malicious hands is that they are accessible—many are open-source or available through easy-to-use platforms.
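For readers who want the mechanics behind the GAN entry above, the original GAN formulation (Goodfellow et al., 2014) frames training as a two-player minimax game. Here $D(x)$ is the discriminator’s estimated probability that $x$ is real, $G(z)$ is the generator’s output for a random noise vector $z$, $p_{\text{data}}$ is the distribution of real media, and $p_z$ is the noise distribution:

```latex
\min_{G}\,\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to push $D(x)$ toward 1 for real samples and $D(G(z))$ toward 0 for generated ones, while the generator tries to make $D(G(z))$ approach 1. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, because that gives it stronger gradients early in training. When this game is trained well, generated samples become hard to tell apart from real data, which is what makes GAN output so convincing.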

Why Deepfakes Are Dangerous

The implications of deepfakes extend far beyond entertainment or novelty. Here’s why they matter:

1. Misinformation and Fake News

Deepfakes have been used to impersonate political figures and spread disinformation during elections.

2. Fraud and Identity Theft

AI-generated voices and videos are being used to trick employees or bank officials into transferring money or sharing sensitive information.

3. Reputation Damage and Blackmail

Fake pornographic videos and defamatory content can ruin careers and severely harm victims’ mental health.

4. Legal and National Security Threats

Fake evidence could be presented in legal trials or used in espionage.

These risks highlight the urgent need for strong detection and prevention strategies.

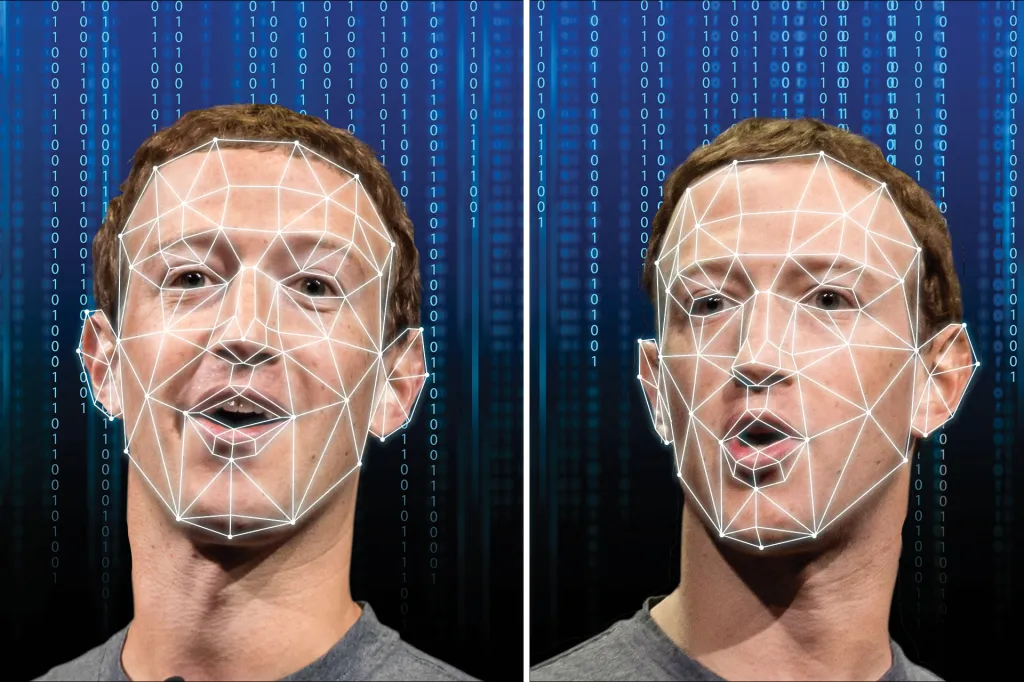

How to Detect Deepfakes Manually and Using AI

How to Detect Deepfakes Manually

While deepfakes are becoming increasingly realistic, most still contain subtle flaws that humans can spot with careful observation. Here’s how to do it:

1. Inconsistent Facial Movements

- What to look for: Jittery or stiff head movement, unnatural blinking, or overly smooth facial transitions.
- Why it matters: Many deepfakes struggle with natural muscle coordination and micro-expressions.

2. Unnatural Eye Movement or Blinking

- What to look for: Long periods without blinking, irregular blinking, or eyes that don’t focus or move naturally.
- Why it matters: Older deepfakes often had issues generating realistic eye behavior.
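Blink behavior can also be measured instead of judged by eye. One well-known approach, the eye aspect ratio (EAR) introduced by Soukupová and Čech (2016), compares vertical and horizontal distances between six landmarks around each eye; the ratio drops sharply when the eye closes. The minimal Python sketch below assumes a separate facial-landmark detector (not shown) supplies the six points per frame. The EAR threshold of 0.2, the two-frame minimum, and the 5 to 40 blinks-per-minute range are illustrative assumptions, not validated cut-offs; resting blink rates are often cited at roughly 15 to 20 per minute, but they vary widely between people and situations.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2 and p3 lie on the upper lid,
    p6 and p5 on the lower lid directly below them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended mid-blink
        blinks += 1
    return blinks


def blink_rate_per_minute(ear_series: Sequence[float], fps: float,
                          threshold: float = 0.2, min_frames: int = 2) -> float:
    """Blinks per minute over the whole clip."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ear_series, threshold, min_frames) / minutes


def is_blink_rate_suspicious(rate: float, low: float = 5.0, high: float = 40.0) -> bool:
    # Hypothetical bounds: flag clips with very few or very many blinks.
    return rate < low or rate > high
```

An unusual rate is a reason to look closer, not proof of manipulation; many newer deepfakes blink convincingly.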

3. Poor Lip Syncing

- What to look for: Lip movements that don’t match the spoken words, or speech that runs slightly ahead of or behind the mouth.
- Why it matters: Accurately mapping speech sounds (phonemes) to matching mouth shapes (visemes) is technically challenging.
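Lip-sync problems can be checked numerically by asking how far the audio must be shifted to best line up with mouth movement. The sketch below is a hand-built simplification, not a production detector (learned audio-visual models such as SyncNet are used for this in practice). It assumes two hypothetical inputs already resampled to the video frame rate: a per-frame mouth-opening measure from a landmark tracker and a per-frame audio loudness envelope. It returns the lag with the highest normalized cross-correlation.

```python
from typing import List, Sequence, Tuple


def _unit_normalize(x: Sequence[float]) -> List[float]:
    """Subtract the mean and scale to unit length so amplitudes don't matter."""
    mean = sum(x) / len(x)
    centered = [v - mean for v in x]
    norm = sum(v * v for v in centered) ** 0.5
    return [v / norm for v in centered] if norm > 0 else centered


def best_av_offset(mouth_opening: Sequence[float], audio_energy: Sequence[float],
                   max_lag: int) -> Tuple[int, float]:
    """Return (lag, score) maximizing correlation between mouth motion and audio.

    A positive lag means the audio trails the video by `lag` frames.
    """
    m = _unit_normalize(mouth_opening)
    a = _unit_normalize(audio_energy)
    n = min(len(m), len(a))
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Pair mouth frame t with audio frame t + lag.
        score = sum(m[t] * a[t + lag] for t in range(n) if 0 <= t + lag < n)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag, best_score
```

A large best lag or a weak peak score suggests the audio and video may not belong together, although poor encoding or streaming can also introduce a constant offset.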

4. Lighting and Shadows

- What to look for: Inconsistent lighting across the face and body, or shadows that don’t align with the environment.
- Why it matters: GAN-generated faces sometimes fail to match the lighting dynamics of the original video.

5. Blurred or Flickering Edges

- What to look for: Blurriness around the jawline, hair, or ears, especially during motion.
- Why it matters: Many deepfakes struggle with edge fidelity during transitions or fast motion.

6. Asymmetrical or Inconsistent Facial Features

- What to look for: Slight differences between the left and right sides of the face, inconsistent facial textures, or mismatched earrings/glasses.
- Why it matters: Deepfake algorithms sometimes produce distorted or asymmetrical images.

7. Artifacts and Glitches

- What to look for: Pixelation, ghosting, or strange distortions in specific frames.
- Why it matters: Blending and warping errors in the face region often reveal synthetic media, although heavy compression can produce similar artifacts in genuine footage.

8. Voice Mismatches or Robotic Sound

- What to listen for: Hollow, robotic tones, offbeat pacing, and lack of emotional expression.
- Why it matters: Even advanced voice clones can miss the subtle emotional and contextual cues of natural speech.
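The flat, “robotic” delivery mentioned in the last item can be roughly quantified by tracking pitch over time. The Python sketch below estimates the fundamental frequency of each audio frame with a basic autocorrelation method and reports how much it varies, in semitones. This is a crude heuristic chosen for illustration, not how commercial voice-clone detectors work: modern clones can have natural-sounding intonation, and a genuinely monotone speaker would also score low. The 60 to 400 Hz pitch range, the 0.3 voicing threshold, and the frame sizes are assumptions.

```python
import math
import statistics
from typing import List, Optional, Sequence


def estimate_f0(frame: Sequence[float], sample_rate: int,
                fmin: float = 60.0, fmax: float = 400.0,
                voicing_threshold: float = 0.3) -> Optional[float]:
    """Estimate pitch (Hz) of one frame via autocorrelation; None if unvoiced."""
    n = len(frame)
    mean = sum(frame) / n
    x = [s - mean for s in frame]
    energy = sum(s * s for s in x)
    if energy == 0.0:
        return None  # silence
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), n - 1)
    best_lag, best_r = None, voicing_threshold
    for lag in range(min_lag, max_lag + 1):
        # Autocorrelation at this lag, normalized by frame energy.
        r = sum(x[i] * x[i + lag] for i in range(n - lag)) / energy
        if r > best_r:
            best_lag, best_r = lag, r
    return None if best_lag is None else sample_rate / best_lag


def pitch_track(signal: Sequence[float], sample_rate: int,
                frame_len: int, hop: int) -> List[float]:
    """Pitch estimates for each voiced frame."""
    f0s = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        f0 = estimate_f0(signal[start:start + frame_len], sample_rate)
        if f0 is not None:
            f0s.append(f0)
    return f0s


def pitch_spread_semitones(f0s: Sequence[float]) -> float:
    """Standard deviation of pitch in semitones around the median pitch."""
    if len(f0s) < 2:
        return 0.0
    ref = statistics.median(f0s)
    return statistics.pstdev([12.0 * math.log2(f / ref) for f in f0s])
```

A spread well under one semitone across a long stretch of conversational speech is unusually flat and may justify a closer listen, but it is only one weak signal.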

How to Detect Deepfakes Using AI Tools

AI-powered deepfake detection tools use machine learning and computer vision algorithms to identify patterns and features that aren’t easily visible to the human eye. Here are key methods and tools:

1. Deep Learning-Based Detection Tools

A. Microsoft Video Authenticator

- Uses AI to analyze still photos and video frames.
- Assigns a confidence score indicating whether the content is likely manipulated.
- Detects subtle fading or blending at face boundaries.

B. Deepware Scanner

- Scans video and audio files for signs of manipulation using neural networks.
- Supports mobile and desktop platforms.

C. Sensity AI

- Enterprise-grade platform that uses proprietary AI to detect visual and audio deepfakes.
- Monitors social media and video platforms in real time.

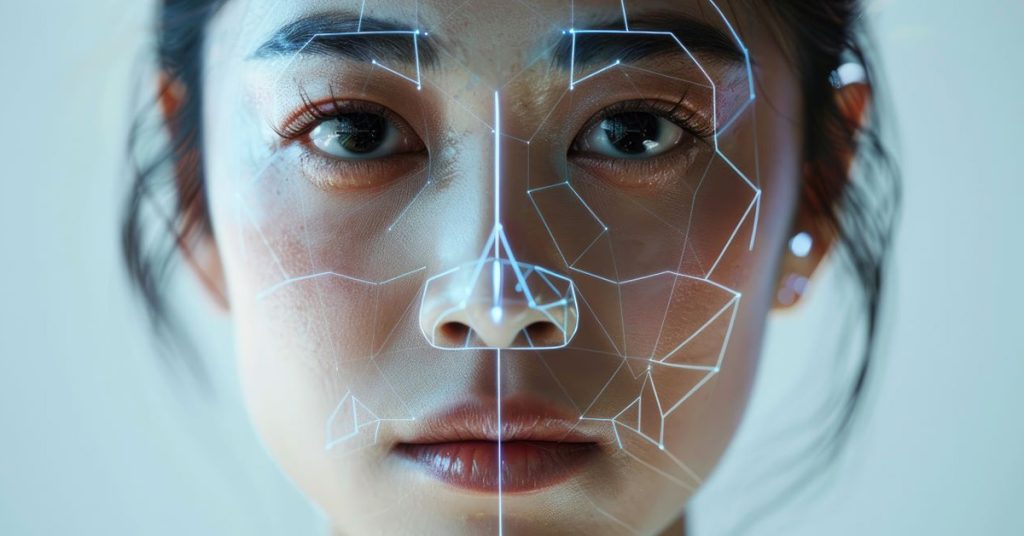

2. Forensic AI Techniques

A. Facial Inconsistency Detection

- AI models examine minute inconsistencies in facial landmarks (e.g., pupil alignment, eyebrow symmetry).
- Detects mismatches across video frames using temporal data.
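As a simple statistical stand-in for the learned models described above (not how any specific product works), the sketch below measures how far facial landmarks move between consecutive frames and flags frames whose movement jumps far above the clip’s typical motion, using the median and the median absolute deviation (MAD). It assumes landmarks come from a separate tracker; the threshold `k` and the `min_scale` floor are hypothetical tuning parameters.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def frame_displacements(frames: Sequence[Sequence[Point]]) -> List[float]:
    """Mean landmark displacement between each pair of consecutive frames."""
    out = []
    for prev, cur in zip(frames, frames[1:]):
        total = sum(math.dist(p, q) for p, q in zip(prev, cur))
        out.append(total / len(cur))
    return out


def flag_jitter(frames: Sequence[Sequence[Point]], k: float = 6.0,
                min_scale: float = 1e-3) -> List[int]:
    """Indices of frames whose motion is far above the clip's typical motion."""
    d = frame_displacements(frames)
    if not d:
        return []
    med = statistics.median(d)
    mad = statistics.median([abs(x - med) for x in d])
    scale = max(mad, min_scale)  # avoid dividing by ~0 on very steady clips
    # d[i] is the motion from frame i to frame i + 1, so report i + 1.
    return [i + 1 for i, x in enumerate(d) if (x - med) / scale > k]
```

Because a spike into a glitched frame is followed by a spike back out, one bad frame usually flags two consecutive indices. Scene cuts and fast genuine motion also trigger it, so flagged frames are candidates for review rather than verdicts.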

B. Frequency Analysis

- Deepfakes often leave frequency-domain anomalies, because the upsampling layers in generative models can introduce periodic, high-frequency patterns.
- AI models apply a Fourier transform to images or frames to expose these unnatural frequency patterns.
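To show what “frequency anomalies” means in practice, the sketch below computes one simple spectral feature: the share of an image patch’s energy that sits at high spatial frequencies. It uses a naive 2D discrete Fourier transform written out in plain Python for readability, which is only practical for tiny patches; real pipelines would use an FFT routine such as `numpy.fft.fft2` and feed full spectra to a trained classifier. The cutoff of 0.5 (on a normalized radius where 0 is the average brightness and 1 is the highest frequency along both axes) is an assumption.

```python
import cmath
import math
from typing import List, Sequence


def dft2_power(img: Sequence[Sequence[float]]) -> List[List[float]]:
    """Power spectrum |F(u, v)|^2 via a naive 2D DFT (O(N^4); tiny patches only)."""
    h, w = len(img), len(img[0])
    power = [[0.0] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            acc = 0j
            for y in range(h):
                for x in range(w):
                    angle = -2.0 * math.pi * (u * y / h + v * x / w)
                    acc += img[y][x] * cmath.exp(1j * angle)
            power[u][v] = abs(acc) ** 2
    return power


def high_freq_energy_ratio(img: Sequence[Sequence[float]], cutoff: float = 0.5) -> float:
    """Share of non-DC spectral energy at normalized radius >= cutoff."""
    h, w = len(img), len(img[0])
    power = dft2_power(img)
    total = high = 0.0
    for u in range(h):
        for v in range(w):
            if u == 0 and v == 0:
                continue  # skip DC (average brightness)
            fu = min(u, h - u) / (h / 2)  # fold negative frequencies; 1 = Nyquist
            fv = min(v, w - v) / (w / 2)
            radius = math.hypot(fu, fv) / math.sqrt(2.0)
            total += power[u][v]
            if radius >= cutoff:
                high += power[u][v]
    return high / total if total > 0 else 0.0
```

No single threshold on this number separates real from fake: camera sensors, sharpening, and compression all reshape the spectrum, which is why detectors learn these patterns from labeled data.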

C. Motion and Texture Analysis

- Neural networks trained to analyze movement fluidity, skin texture, and motion blur.
- Captures inconsistencies that occur when faces are swapped.

3. Blockchain and Provenance Tracking

A. Content Credentials (Adobe / C2PA)

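- Embeds cryptographically signed metadata (a manifest) recording the file’s origin and edit history.
- Verification tools can read the manifest to see whether the media was generated or edited with AI tools, and whether it has been altered since it was signed.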
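The core idea behind signed provenance fits in a few lines: hash the content, record how it was made, sign both, and later check the signature and the hash. This is a toy model, not the C2PA format. Real Content Credentials use public-key signatures backed by X.509 certificates and embed the manifest in the file, whereas this sketch uses a shared-secret HMAC from Python’s standard library as a stand-in. The manifest layout and function names are hypothetical; the value `trainedAlgorithmicMedia` is borrowed from the IPTC digital source type vocabulary that C2PA uses to mark AI-generated media.

```python
import hashlib
import hmac
import json
from typing import Any, Dict, List

AI_SOURCE = "trainedAlgorithmicMedia"  # IPTC digital source type for AI-generated media


def _sign(claim: Dict[str, Any], key: bytes) -> str:
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, actions: List[Dict[str, str]], key: bytes) -> Dict[str, Any]:
    """Bind a content hash and its declared history together under one signature."""
    claim = {"content_sha256": hashlib.sha256(content).hexdigest(), "actions": actions}
    return {"claim": claim, "signature": _sign(claim, key)}


def verify_manifest(content: bytes, manifest: Dict[str, Any], key: bytes) -> str:
    claim = manifest["claim"]
    if not hmac.compare_digest(_sign(claim, key), manifest["signature"]):
        return "invalid-signature"  # the manifest itself was altered
    if hashlib.sha256(content).hexdigest() != claim["content_sha256"]:
        return "content-modified"   # the media changed after signing
    return "valid"


def declares_ai_generation(manifest: Dict[str, Any]) -> bool:
    return any(a.get("digitalSourceType") == AI_SOURCE for a in manifest["claim"]["actions"])
```

A valid manifest shows the file is unchanged since signing and records its declared origin; media without any manifest is simply unverified, not necessarily fake.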

B. Truepic Lens

- Provides image and video verification by embedding secure metadata at the time of capture.
- Helps counter deepfakes by letting viewers trace authentic media back to the device that captured it.

4. Voice Deepfake Detection Tools

A. Resemble Detect

- Detects whether an audio file contains synthetic voice generated using AI.
- Compares vocal cadence, tone, and prosody to trained baseline models.

B. Pindrop’s Deep Voice Detection

- Uses acoustic analysis to detect deepfake audio in real time for call centers and financial services.

5. Browser Plugins and Mobile Apps

A. Amber Video

- Flags suspicious content and provides context around potentially fake videos.
- Highlights sources and suggests authenticity checks.

B. Hive Moderation

- Integrates with content platforms to scan uploaded media and flag likely deepfakes in real time, so they can be reviewed or removed.

Manual vs AI Detection: Comparison Table

| Feature | Manual Detection | AI-Based Detection |
|---|---|---|
| Accuracy | Varies with the reviewer’s skill and awareness | Often higher on content similar to the training data; can drop on new generation methods |
| Speed | Slow; requires frame-by-frame review | Fast; can run in real time |
| Scalability | Not scalable | Highly scalable across platforms |
| False Positives/Negatives | Higher | Lower when trained on representative, up-to-date data |
| Ease of Use | No tools required | May require software or APIs |

Current Real-World Cases of Deepfakes

Understanding real-world incidents helps contextualize the threat:

- Financial Scam (2024): An employee at the Hong Kong office of a multinational firm transferred about US$25 million after a video call in which the company’s chief financial officer and other colleagues were deepfaked.
- Political Manipulation: Deepfakes of U.S. and Indian politicians have gone viral, spreading false messages.
- Celebrity Exploitation: AI-generated explicit content of actors has been distributed online, raising serious ethical and legal concerns.

These examples are not isolated events—they’re part of a growing trend that affects global trust in media and communication.

Technologies to Detect and Prevent Deepfakes

Detecting deepfakes is a complex but rapidly evolving field. Here are the most effective technologies being developed and deployed:

1. Deepfake Detection Algorithms

- Use AI to analyze micro-expressions, lip-sync mismatches, lighting inconsistencies, and eye movement anomalies.
- Examples: Microsoft’s Video Authenticator and the models developed through the Deepfake Detection Challenge (DFDC), launched by Facebook (now Meta) with industry and academic partners.

2. Blockchain and Digital Watermarking

- Provenance tracking embeds metadata or blockchain records to verify the authenticity of media content.
- Adobe’s Content Credentials project embeds origin and edit history in images.
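The tamper-evidence that blockchain-based provenance relies on comes from hash chaining: each record stores the hash of the record before it, so rewriting any earlier entry breaks every later link. The single-process sketch below shows only that data structure; a real blockchain adds distribution and consensus among many parties, and the record fields and function names here are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _record_hash(record: Dict[str, str]) -> str:
    body = {k: record[k] for k in ("prev_hash", "content_sha256", "note")}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(chain: List[Dict[str, str]], content: bytes, note: str) -> None:
    """Register a version of a media file, linked to the previous record."""
    prev = chain[-1]["hash"] if chain else GENESIS
    rec = {"prev_hash": prev,
           "content_sha256": hashlib.sha256(content).hexdigest(),
           "note": note}
    rec["hash"] = _record_hash(rec)
    chain.append(rec)


def verify_chain(chain: List[Dict[str, str]]) -> bool:
    """True only if every record is intact and correctly linked."""
    prev = GENESIS
    for rec in chain:
        if rec["prev_hash"] != prev or rec["hash"] != _record_hash(rec):
            return False
        prev = rec["hash"]
    return True


def is_registered(chain: List[Dict[str, str]], content: bytes) -> bool:
    digest = hashlib.sha256(content).hexdigest()
    return any(r["content_sha256"] == digest for r in chain)
```

An exact-hash lookup answers “has this exact file been recorded?”. Any re-encoding changes the SHA-256 hash, so this suits original files better than copies that have been recompressed on social media.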

3. Biometric and Liveness Detection

- Face ID and iris scanners verify identity while checking that a live person is present; Face ID, for example, uses depth sensing to reject flat photos.
- Liveness detection is especially useful in financial services and facial recognition systems.
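A common way to make liveness checks harder to fool with pre-recorded or pre-rendered video is challenge-response: the system asks for a randomly chosen action and accepts only a matching response within a short time window. The sketch below assumes a hypothetical upstream vision model that reports which action the user performed (`observed_action`); the prompt list and the 10-second window are illustrative choices. Each challenge is single-use, so a captured response cannot be replayed.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Dict, Optional

# Illustrative prompt set; a real system would use many more variations.
CHALLENGES = ("turn head left", "turn head right", "blink twice", "smile", "look up")


@dataclass
class Challenge:
    nonce: str
    prompt: str
    issued_at: float


class LivenessVerifier:
    def __init__(self, max_age_s: float = 10.0) -> None:
        self.max_age_s = max_age_s
        self._pending: Dict[str, Challenge] = {}

    def issue(self, now: Optional[float] = None) -> Challenge:
        """Create an unpredictable, single-use challenge."""
        ch = Challenge(
            nonce=secrets.token_hex(16),
            prompt=secrets.choice(CHALLENGES),
            issued_at=time.time() if now is None else now,
        )
        self._pending[ch.nonce] = ch
        return ch

    def verify(self, nonce: str, observed_action: str, now: Optional[float] = None) -> bool:
        """`observed_action` is assumed to come from a separate vision model."""
        ch = self._pending.pop(nonce, None)  # pop: each nonce works only once
        if ch is None:
            return False
        now = time.time() if now is None else now
        if now - ch.issued_at > self.max_age_s:
            return False  # response arrived too late
        return observed_action == ch.prompt
```

Real-time face-swapping tools can sometimes follow such prompts, so challenge-response raises the bar rather than closing the gap; it works best alongside depth sensing or other signals.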

4. AI-Based Voice Verification

- Companies like Pindrop and Nuance develop tools to detect synthetic voice attacks.
- AI can analyze vocal tones and breathing patterns to determine authenticity.

Best Practices for Individuals and Organizations

While technology is crucial, human awareness and process improvements are equally important.

For Individuals:

- Double-check media: Be skeptical of sensational videos or messages from public figures.
- Use reverse image search: Tools like Google Lens and TinEye can help trace where visual content first appeared.
- Install detection apps: Tools like Sensity AI or Amber Video can help identify deepfakes.
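Reverse image search services do not fully document their matching methods, but perceptual hashing illustrates the general idea: reduce an image to a compact fingerprint that survives small edits, so near-duplicates can be found even after brightness changes or recompression. The sketch below implements a simple average hash (aHash) on a grayscale image given as a list of pixel rows; it assumes the image is at least 8 by 8 pixels, and it is an illustration, not what Google Lens or TinEye actually run.

```python
from typing import Sequence


def average_hash(gray: Sequence[Sequence[float]], size: int = 8) -> int:
    """aHash: block-average down to size x size, then one bit per cell."""
    h, w = len(gray), len(gray[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for by in range(size):
        for bx in range(size):
            y0, y1 = by * h // size, (by + 1) * h // size
            x0, x1 = bx * w // size, (bx + 1) * w // size
            block = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for c in cells:
        bits = (bits << 1) | (1 if c > mean else 0)  # brighter than average -> 1
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")
```

A small Hamming distance between two hashes suggests the images share a source, which can reveal that a “new” viral image is an edited copy of an older one.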

For Organizations:

- Train employees: Awareness programs should include deepfake detection and response drills.
- Deploy deepfake detection tools: Integrate them into content workflows and communication channels.
- Set up verification protocols: For instance, require voice and facial liveness checks for financial transactions, and confirm high-value requests through a separate, pre-established channel such as a call back to a known number.
- Zero-trust communication policy: Treat media and requests as untrusted until they have been verified.
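Verification protocols work best when they are written down as explicit rules rather than left to judgment under pressure. The Python sketch below encodes one possible policy for payment requests; the field names, the 10,000 and 100,000 thresholds, and the rule set are hypothetical examples to adapt. The call-back rule assumes confirmation through a contact number already on file, never one supplied in the request itself, because an attacker who can fake a video call can also supply a fake number.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PaymentRequest:
    amount: float
    channel: str           # e.g. "video_call", "phone", "email"
    new_beneficiary: bool  # paying an account not used before
    urgent: bool           # pressure to skip normal process is a red flag


@dataclass
class Verification:
    liveness_passed: bool = False
    callback_confirmed: bool = False  # via a number already on file
    second_approver: bool = False


def missing_checks(req: PaymentRequest, ver: Verification,
                   callback_threshold: float = 10_000.0,
                   dual_threshold: float = 100_000.0) -> List[str]:
    """Return the checks still required before the payment may proceed."""
    missing = []
    if req.channel in ("video_call", "phone") and not ver.liveness_passed:
        missing.append("liveness")
    needs_callback = (req.amount >= callback_threshold
                      or req.new_beneficiary or req.urgent)
    if needs_callback and not ver.callback_confirmed:
        missing.append("callback")
    if req.amount >= dual_threshold and not ver.second_approver:
        missing.append("second_approver")
    return missing
```

The policy deliberately ignores how convincing the request looked: a realistic video call is exactly what a deepfake attack produces.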

The Role of Government and Policy

Preventing deepfakes isn’t just a technological challenge—it’s a regulatory and legal one.

1. Existing Regulations

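- EU AI Act (2024): Requires AI-generated or manipulated content, including deepfakes, to be disclosed as such, with these transparency obligations applying from August 2026.
- U.S. DEEPFAKES Accountability Act (proposed): Would require creators to disclose altered content.
- China’s Deep Synthesis Regulation (in force since January 2023): Requires AI-generated content that could mislead the public to be clearly labeled, and requires providers to add marks that make such content traceable.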

2. Policy Recommendations

- Global standards: International cooperation on labeling and authenticity metadata.
- Platform accountability: Social networks must scan and remove harmful deepfakes.
- Public education: Governments should fund media literacy campaigns to raise awareness.

Future Trends in Deepfake Prevention

As AI continues to evolve, so must our defenses. Here are emerging trends:

1. AI vs. AI Arms Race

The contest between generative AI that creates deepfakes and discriminative AI that detects them will continue to escalate. Companies like Google DeepMind are researching self-supervised systems that learn from evolving threats.

2. Multimodal Verification

Combining audio, video, text, and metadata to authenticate media across multiple dimensions.
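One simple way to combine evidence from several modalities is log-odds fusion: convert each detector’s probability that the media is fake into log-odds, add up the evidence, and convert back. The sketch below assumes each detector is calibrated and that their errors are roughly independent, which real detectors often violate; the per-modality weights are a hypothetical knob for discounting weaker or correlated signals.

```python
import math
from typing import Dict, Optional


def logit(p: float) -> float:
    p = min(max(p, 1e-6), 1.0 - 1e-6)  # clamp to avoid infinite log-odds
    return math.log(p / (1.0 - p))


def fuse_scores(scores: Dict[str, float],
                weights: Optional[Dict[str, float]] = None,
                prior: float = 0.5) -> float:
    """Combine per-modality P(fake) into one probability via log-odds."""
    weights = weights or {}
    total = logit(prior)
    for modality, p in scores.items():
        # Evidence = how far this detector moved away from the prior.
        total += weights.get(modality, 1.0) * (logit(p) - logit(prior))
    return 1.0 / (1.0 + math.exp(-total))
```

With two calibrated detectors each reporting 0.8, the fused probability rises to 16/17 (about 0.94); a detector reporting 0.5 leaves the result unchanged because it carries no evidence either way.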

3. Real-time Detection at Scale

Social media platforms are developing systems that detect and flag deepfakes within seconds of upload.

4. Decentralized Identity Verification

Solutions like Self-Sovereign Identity (SSI) frameworks are emerging to verify identity without depending on central authorities, making impersonation harder.

Conclusion

Deepfakes present one of the most insidious threats of the digital age, blending technology with deception in ways that challenge our perception of reality. In the era of generative AI, the only effective solution lies in a multi-layered strategy that combines technological tools, legal frameworks, educational initiatives, and individual vigilance.

Key Takeaways:

- Deepfakes are enabled by generative AI and are growing in sophistication.
- Detection technology is improving, but awareness and prevention are just as vital.
- Organizations must implement clear verification protocols and employee training.
- Governments need to enforce transparent policies and support tech innovation.

By staying informed, adopting the right tools, and fostering a culture of digital skepticism, we can mitigate the risks and ensure that AI remains a force for good—not deception.