How Do AI Systems Identify Duplicate Data?

A look at how AI systems compare records in a database to find duplicates, and how these techniques can be applied to Salesforce.

You can easily tell whether two records in Salesforce, or in any other CRM for that matter, are duplicates by comparing them side by side. But even with a relatively small number of records, say fewer than 100,000, sifting through them one by one and making such comparisons would be nearly impossible. Companies have developed numerous tools to automate this work, but to do the job well, the machines must be able to recognize all of the similarities and differences between records. In this post, we’ll look at some of the techniques data scientists use to train machine learning algorithms to detect duplicates.

How Can AI Systems Compare and Contrast Records?

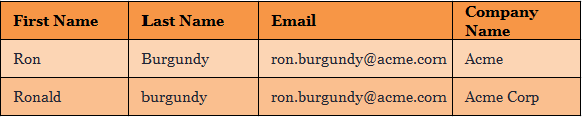

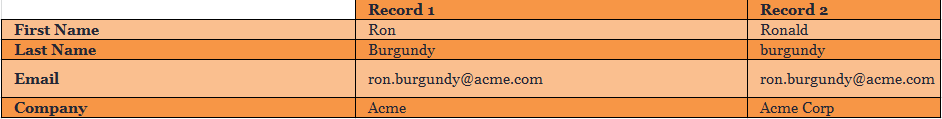

String metrics are a common tool used by researchers. A string metric takes two strings and returns a number that is low if the strings are similar and high if they are dissimilar. How does this work in practice? Let’s look at the two records below:

If a human were to examine these two records, it would be clear that they are duplicates. Machines, on the other hand, rely on string metrics to mimic human thought processes, which is what AI is all about. One of the best-known string metrics is the Hamming distance, which counts the number of substitutions required to convert one string into another. Returning to the two records above, only one substitution is needed to change “burgundy” to “Burgundy,” resulting in a Hamming distance of 1.
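The Hamming distance is simple enough to sketch in a few lines of Python. The function name `hamming_distance` is just an illustrative choice, and the comparison is case-sensitive, which is why the capital “B” counts as a substitution.

```python
def hamming_distance(a: str, b: str) -> int:
    """Count the positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance is only defined for strings of equal length")
    # Walk both strings in lockstep and count mismatching characters.
    return sum(ch_a != ch_b for ch_a, ch_b in zip(a, b))


print(hamming_distance("burgundy", "Burgundy"))                  # 1
print(hamming_distance("burgundy".lower(), "Burgundy".lower()))  # 0
```

The second call shows that normalizing case before comparing removes the difference entirely, which is a common preprocessing step before any string metric is applied.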

There are many different string metrics that measure the similarity of two strings, and what distinguishes them is the operations they allow. The Hamming distance only permits substitutions, which means it can only be used on strings of equal length. The Levenshtein distance, by contrast, allows deletions, insertions, and substitutions, so it also works on strings of different lengths.
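To show how the extra operations change the picture, here is a minimal sketch of the Levenshtein distance using the standard dynamic-programming approach. The function name is illustrative, and production systems would more likely rely on an optimized library than on this plain-Python version.

```python
def levenshtein_distance(a: str, b: str) -> int:
    """Minimum number of insertions, deletions and substitutions to turn a into b."""
    # previous[j] is the distance between the prefix of a processed so far
    # and the first j characters of b. Initially the prefix of a is empty.
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]  # turning the first i characters of a into "" takes i deletions
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute, or keep a matching character
            ))
        previous = current
    return previous[-1]


print(levenshtein_distance("burgundy", "Burgundy"))  # 1
print(levenshtein_distance("Jon", "John"))           # 1
print(levenshtein_distance("kitten", "sitting"))     # 3
```

Unlike the Hamming distance, this handles “Jon” and “John” without complaint, since a single insertion accounts for the difference in length.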

How Can All of This Be Used to Dedupe Salesforce?

There are a couple of ways an AI system can approach Salesforce deduplication. One of the ways is the blocking method, which is illustrated below:

Blocking is what makes this approach scalable. It works like this: whenever you add new records to Salesforce, the system automatically groups together records that appear to be “similar.” The grouping criterion could be something as simple as the first three letters of a person’s first name, or it might be anything else.
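A minimal sketch of blocking might look like the following. It uses the first three letters of the first name as the blocking key, as in the example above. The record layout, the field names, and the helper names (`blocking_key`, `build_blocks`, `candidate_pairs`) are all assumptions made for illustration and do not come from any Salesforce API.

```python
from collections import defaultdict
from itertools import combinations


def blocking_key(record: dict) -> str:
    """Hypothetical key: first three letters of the first name, lowercased."""
    return record["first_name"].strip().lower()[:3]


def build_blocks(records):
    """Group records that share the same blocking key."""
    blocks = defaultdict(list)
    for record in records:
        blocks[blocking_key(record)].append(record)
    return blocks


def candidate_pairs(records):
    """Yield only the pairs of records that fall into the same block."""
    for block in build_blocks(records).values():
        yield from combinations(block, 2)


records = [
    {"id": 1, "first_name": "Jonathan", "email": "jon@example.com"},
    {"id": 2, "first_name": "Jon", "email": "jon@example.com"},
    {"id": 3, "first_name": "Maria", "email": "maria@example.com"},
    {"id": 4, "first_name": "Marie", "email": "marie@example.com"},
    {"id": 5, "first_name": "Peter", "email": "peter@example.com"},
]

pairs = [(a["id"], b["id"]) for a, b in candidate_pairs(records)]
print(pairs)  # [(1, 2), (3, 4)]
```

Comparing all five records against each other would take ten comparisons; with this key, only two candidate pairs remain. The trade-off is that a poorly chosen key can place true duplicates in different blocks, so they are never compared.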

This is advantageous because it reduces the number of comparisons required: each new record only needs to be compared with the records in its own group. Consider the following scenario: you have 100,000 records in Salesforce and want to import a 50,000-record Excel spreadsheet. Traditional rule-based deduplication software would require 5,000,000,000 comparisons to compare each new record with every existing record (100,000 x 50,000). Consider how much time this would take and how much the risk of error would increase. It’s also important to remember that 100,000 Salesforce records is a relatively small quantity. Many organizations have hundreds of thousands, if not millions, of records. As a result, the traditional approach simply does not scale to such volumes.
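The arithmetic can be checked with a short calculation. The blocked figure below rests on an idealized assumption that is not from the original scenario: both datasets are spread evenly across 1,000 blocks. Real data is rarely this uniform, so treat the second number as a rough illustration of the order of magnitude only.

```python
def naive_comparisons(existing: int, incoming: int) -> int:
    """Every incoming record is compared with every existing record."""
    return existing * incoming


def blocked_comparisons(existing: int, incoming: int, num_blocks: int) -> int:
    """Comparisons needed if records only meet others in the same block.

    Idealized assumption: both datasets split evenly across the blocks.
    """
    per_block_existing = existing // num_blocks
    per_block_incoming = incoming // num_blocks
    return num_blocks * per_block_existing * per_block_incoming


print(f"{naive_comparisons(100_000, 50_000):,}")           # 5,000,000,000
print(f"{blocked_comparisons(100_000, 50_000, 1_000):,}")  # 5,000,000
```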

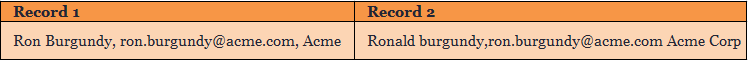

The other part of the approach is to compare each field individually:

After the system has grouped “similar” records together, it examines each pair of records field by field. The string metrics discussed earlier are used in this step. In addition, the system assigns each field a specific “weight,” or relevance. Let’s say that the “Email” field is the most important in your dataset. You can either set the weights explicitly, or the system can learn the right weights as you classify records as duplicates (or not). The latter is referred to as Active Learning, and it is preferred because the system can work out the importance of one field relative to another from your answers.
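As a rough sketch of field-by-field comparison with weights, the example below combines per-field similarities into a single score. The weights, field names, and function names are hypothetical, and `difflib.SequenceMatcher` from the Python standard library stands in for whichever string metric a real system would use.

```python
from difflib import SequenceMatcher

# Hypothetical weights: "email" is treated as the most important field.
FIELD_WEIGHTS = {"email": 0.6, "last_name": 0.25, "first_name": 0.15}


def field_similarity(a: str, b: str) -> float:
    """Similarity between 0.0 and 1.0 after trimming whitespace and lowercasing."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def record_similarity(rec_a: dict, rec_b: dict, weights=FIELD_WEIGHTS) -> float:
    """Weighted average of the per-field similarities."""
    total_weight = sum(weights.values())
    score = sum(
        weight * field_similarity(rec_a.get(field, ""), rec_b.get(field, ""))
        for field, weight in weights.items()
    )
    return score / total_weight


a = {"first_name": "Jon", "last_name": "Smith", "email": "jon.smith@example.com"}
b = {"first_name": "John", "last_name": "Smith", "email": "Jon.Smith@example.com"}
c = {"first_name": "Maria", "last_name": "Garcia", "email": "mgarcia@other.org"}

print(record_similarity(a, b))  # close to 1: likely duplicates
print(record_similarity(a, c))  # much lower: likely distinct people
```

A pair would then be flagged as a duplicate when its score exceeds some threshold, which is itself a tuning decision.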

What Are the Advantages of the Machine Learning Approach?

The most significant advantage of machine learning is that it does all of the work for you. The Active Learning feature described in the previous section automatically applies the relevant weight to each field. This means that no complicated setup procedures or rules are required. Compare this with the rule-based alternative. Assume that one of the sales reps discovers a duplicate and reports it to the Salesforce admin. The admin then creates a rule to prevent such duplicates in the future. This process would have to be repeated every time a new kind of duplicate is discovered, which makes it unsustainable.
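To make the idea of learned weights concrete, here is one way it could work. This sketch is an assumption, not a description of any particular product: it trains a small logistic regression model with stochastic gradient descent on pairs that a user has labeled, then uses uncertainty sampling (a common active learning strategy) to pick the pair the user should label next. The feature values are made-up per-field similarities in the order email, last name, first name.

```python
import math


def predict(weights, bias, features):
    """Probability that a pair is a duplicate, given its per-field similarities."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(labeled, n_features, epochs=500, lr=0.5):
    """Fit logistic regression weights with stochastic gradient descent."""
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for features, label in labeled:
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def most_uncertain(weights, bias, unlabeled):
    """Uncertainty sampling: the pair whose prediction is closest to 0.5."""
    return min(unlabeled, key=lambda f: abs(predict(weights, bias, f) - 0.5))


# Made-up training data: (email_sim, last_name_sim, first_name_sim), label.
# Label 1 means the user marked the pair as a duplicate, 0 means not.
labeled = [
    ((1.0, 0.9, 0.3), 1),
    ((0.95, 0.4, 0.8), 1),
    ((0.2, 0.9, 0.9), 0),
    ((0.1, 0.8, 0.2), 0),
]
unlabeled = [(1.0, 1.0, 1.0), (0.55, 0.7, 0.5), (0.0, 0.1, 0.1)]

weights, bias = train(labeled, n_features=3)
print("learned weights:", weights)
print("ask the user about:", most_uncertain(weights, bias, unlabeled))
```

In this toy data the email similarity is what separates duplicates from non-duplicates, so the model ends up giving that field the largest weight without anyone writing a rule for it. Each new answer from the user is added to `labeled` and the model is retrained.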

Keep in mind that Salesforce’s built-in deduplication is also rule-based; it’s just quite limited. For example, you can only merge three records at a time, custom objects are not supported, and there are numerous additional restrictions. Machine learning is simply the more intelligent option: writing rules is merely automation, whereas AI and machine learning attempt to replicate the human reasoning process. This article delves deeper into the differences between machine learning and automation. It makes little sense to choose a deduplication product that merely extends Salesforce’s built-in functionality instead of solving the entire problem. This is why the machine learning approach is the best way to go.

Related Articles:

https://dzone.com/articles/how-do-ai-systems-identify-duplicate-data